This guide explains how to use the Experiment Builder tool in Gorilla to design and manage your experiments. The Experiment Builder is where you combine all of the tasks and questionnaires you have created into a single flow for participants to complete.

For a walkthrough on creating experiments in Gorilla, check out our Step-by-Step guide.

If you can't find an answer to your question in the topics in the menu, please get in touch with us via our contact form. We are always happy to help! Simply tell us a little about what you are trying to achieve and where you are getting stuck.

In Gorilla you create Experiments using the Experiment Tree.

Gorilla uses a graphical drag-and-drop interface to represent your Experiments, which take the form of a tree or flowchart.

You create Experiments by combining your Questionnaires and Tasks as 'Nodes', which you link together to form your experiment tree.

A simple experiment may consist of a consent Questionnaire, a demographics Questionnaire and a test Task.

For more advanced experiments, there are also powerful Control Nodes, such as the Randomiser Node, Branch Node, and Order Node, which support complex experimental designs, all without touching a single line of code!

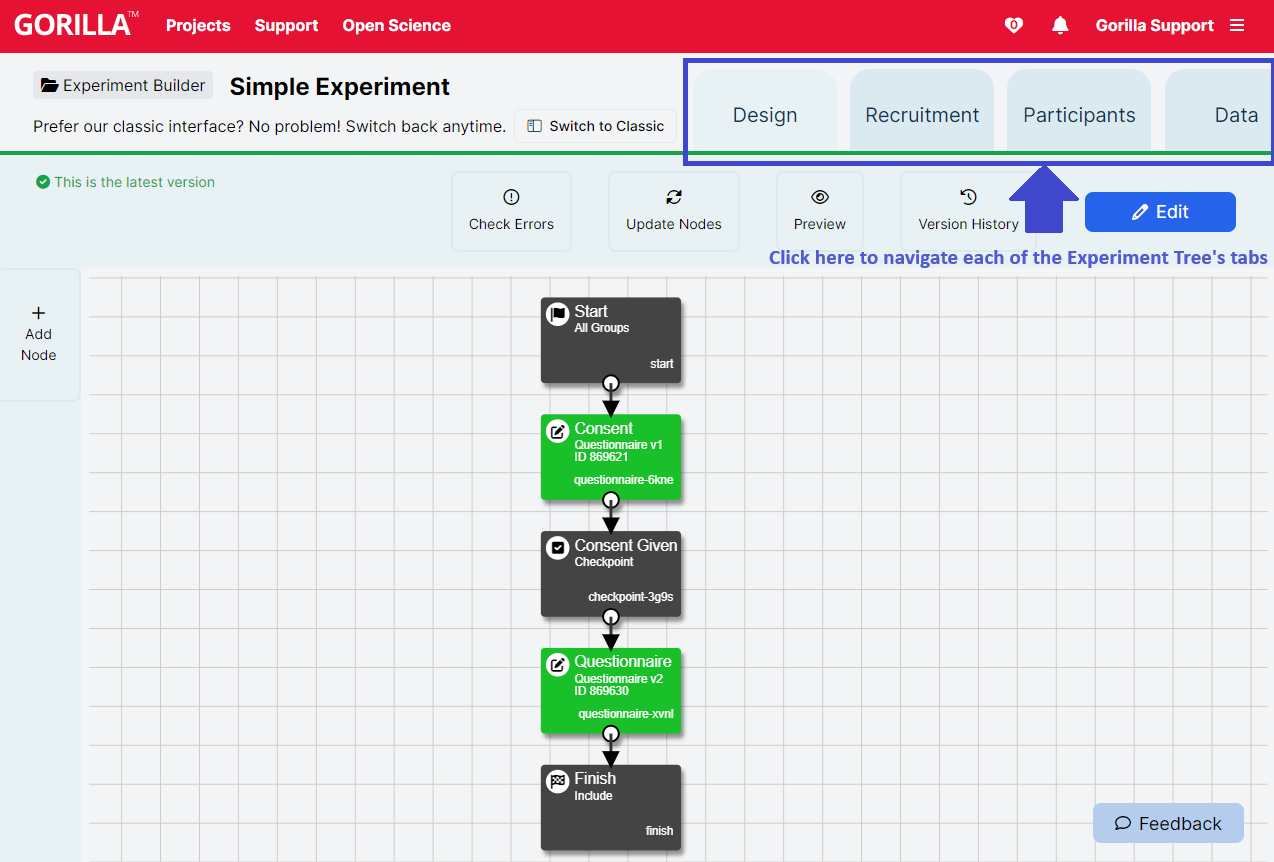

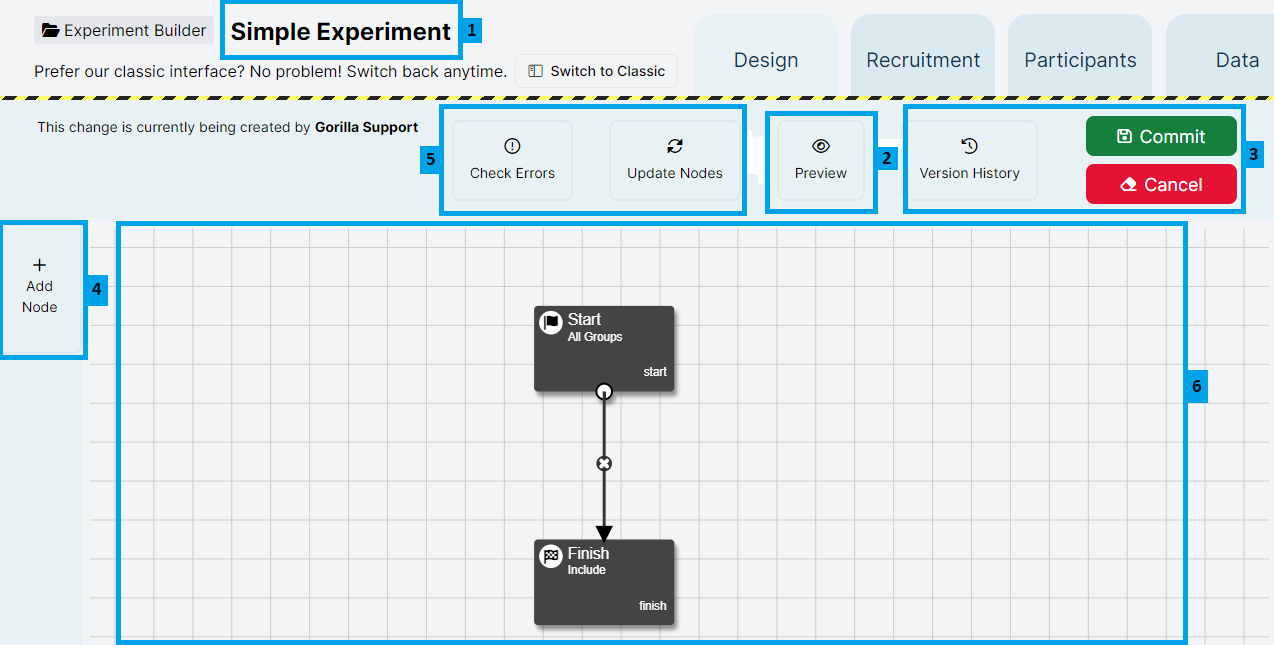

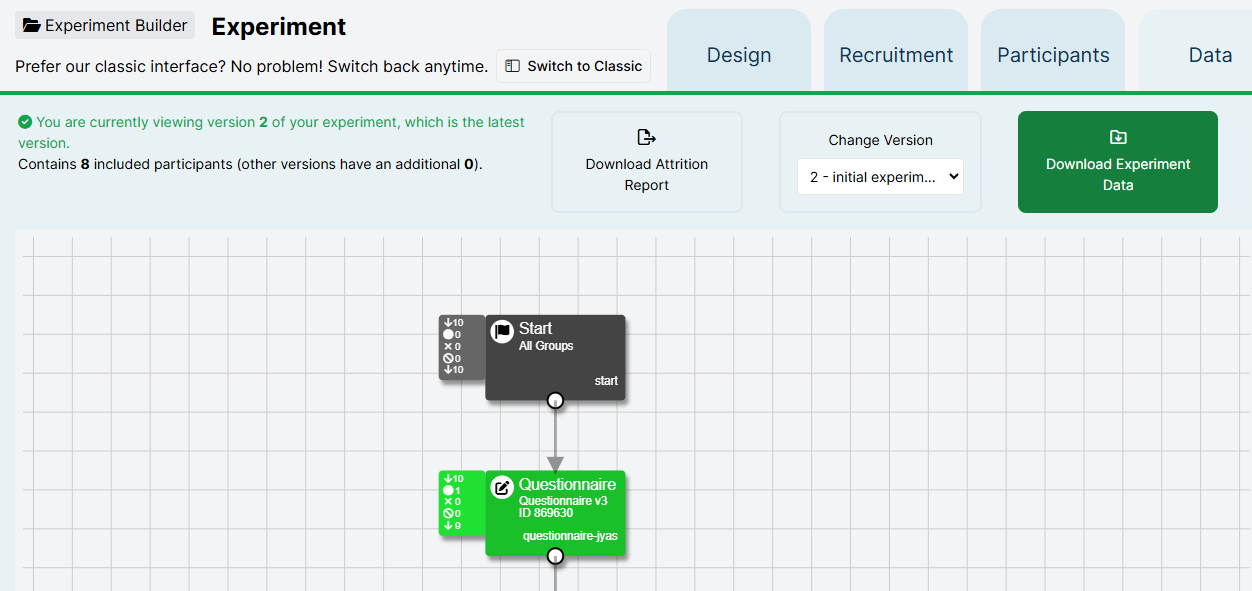

This screenshot shows the new Experiment Builder interface. If you’re still using the classic interface, it will appear slightly different.

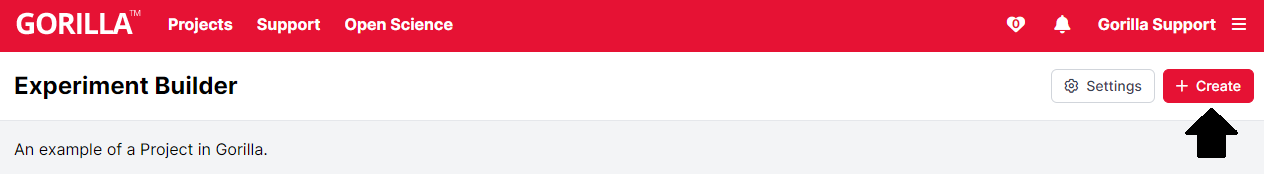

A new Gorilla Experiment can be created within a Project by scrolling to the Experiments section and clicking the 'Create New' button.

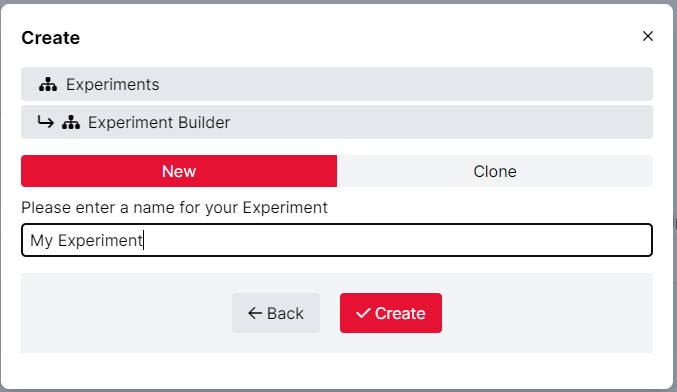

With the 'Create New' option selected, enter a name for your new Experiment and press Create. Alternatively, you can clone an existing Experiment.

You will then be redirected to the Design Tab for your newly created Experiment.

You can learn more about the Experiment Builder interface here.

When choosing a name for your Experiment, try to make it something unique and memorable - a name you would easily associate with the Experiment's content.

You will use this name to identify your Experiment in your project. It is also the name people will see if you collaborate with someone or send your Experiment to them, so it's important that they can recognise it easily, too!

In the Classic Experiment Builder, you can also add a description to your Experiment via the Description option in the Settings menu. This description will then be visible from the project overview screen. You can use this feature to add a short reminder of what your Experiment is about or leave a progress message to yourself or collaborators. Descriptions are limited to 1000 characters in length.

Adding a description is not yet available in New Experiment Builder. To add or change a description, use the 'Switch to Classic' button to switch back to Classic Experiment Builder, make the change, then switch back to New Experiment Builder.

The Experiment Tree interface is divided into 4 major sections, each found in a separate Tab:

Each major section represents a stage in your overall experimental design. Usually, you will progress through each of these sections one after another from left to right.

When you first enter an Experiment you will be presented with the Design Tab as it is shown in the images below. The Design Tab is where you create your experimental design.

From this page you can navigate to any of the tabs for your experiment.

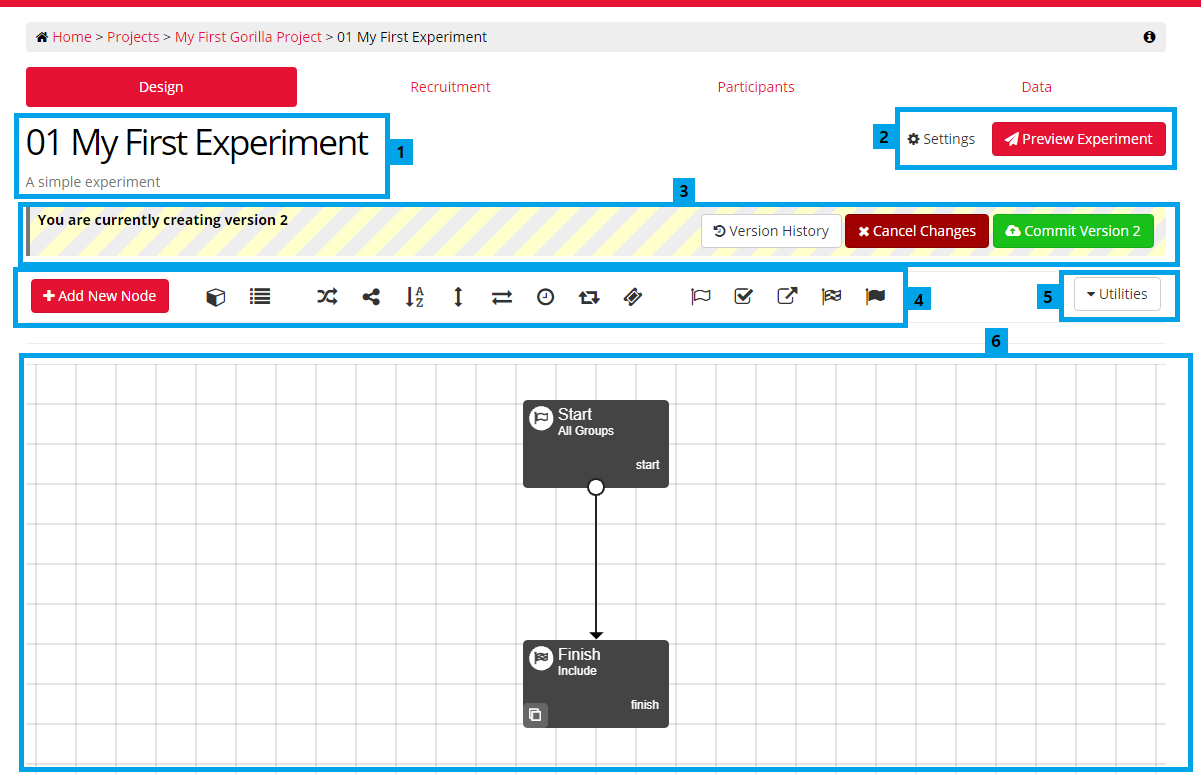

The images below show the Design Tab of the Experiment Tree, with an example of a simple experiment. The Design Tab will appear slightly different depending on whether you are using New Experiment Builder or Classic Experiment Builder.

New Experiment Builder:

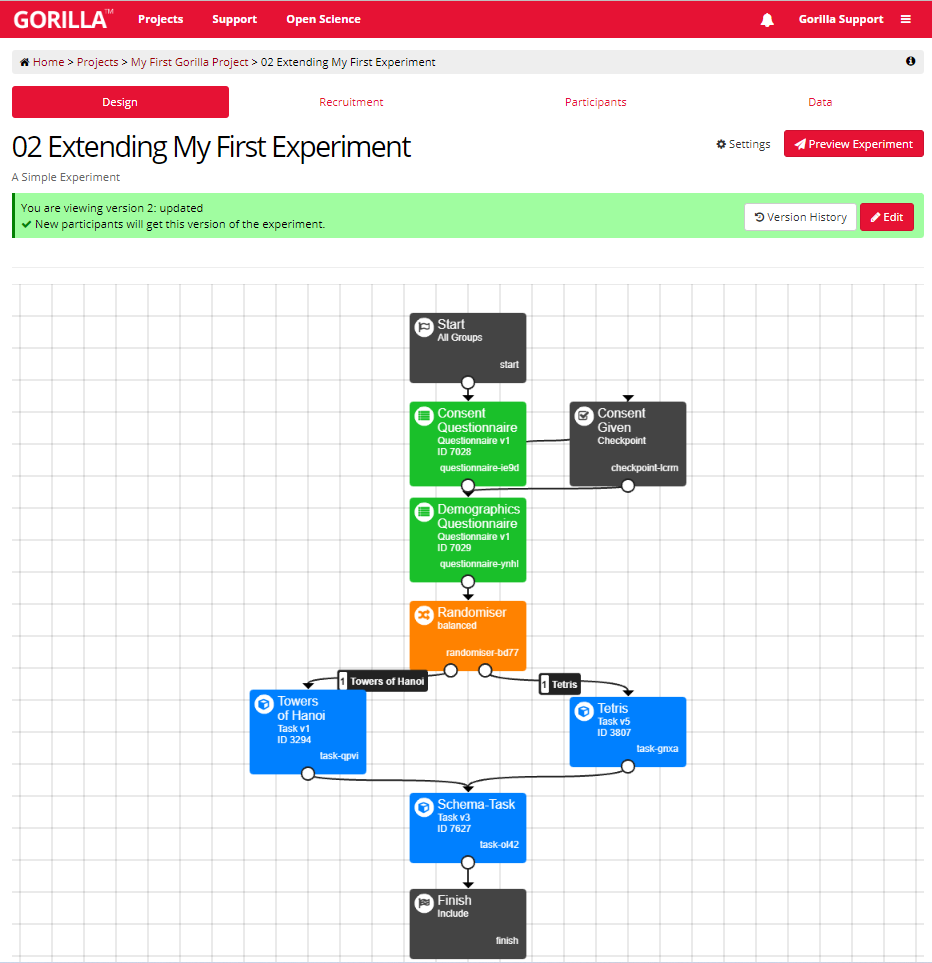

Classic Experiment Builder:

You can now learn how to use the Experiment Tree to design and build a simple Experiment, like the one in the example above.

In Gorilla you build your Experiments in the Design Tab of the Experiment Tree. The Design Tab will appear slightly different depending on whether you are using New Experiment Builder or Classic Experiment Builder.

New Experiment Builder:

Classic Experiment Builder:


When you create a new experiment for the first time you'll notice that the experiment tree already contains two Nodes: a Start Node and a Finish Node.

When building a new experiment, the first step is to add some more Nodes. Experiment Tree Nodes are the building blocks of experiment creation. You can find out more about the different types of node in the What are Nodes? section.

For all types of node, the process to add them is the same. You will need to follow slightly different steps depending on whether you're using our New Experiment Builder or Classic Experiment Builder.

New Experiment Builder:

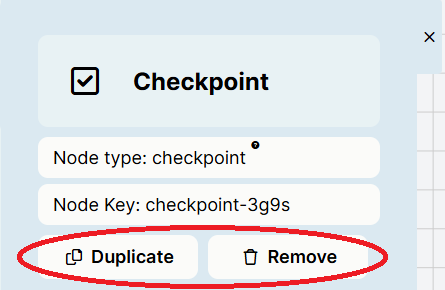

To make a copy of a Node, click the Node and then click the 'Duplicate' button in the Node settings. To remove a Node, click the Node and then click the 'Remove' button in the Node settings.

Classic Experiment Builder:

To make a copy of a Node, click the Duplicate icon at its bottom left corner.

To remove a Node, click the Node and then click the 'Remove' button.

Experiment Tree Nodes are the building blocks of your experiment. They fall into three categories: Core Nodes, Study Nodes, and Control Nodes. Click on the name of each Node for more detailed information about each Node and how to set them up.

These control how your participants enter, progress through, and exit the experiment.

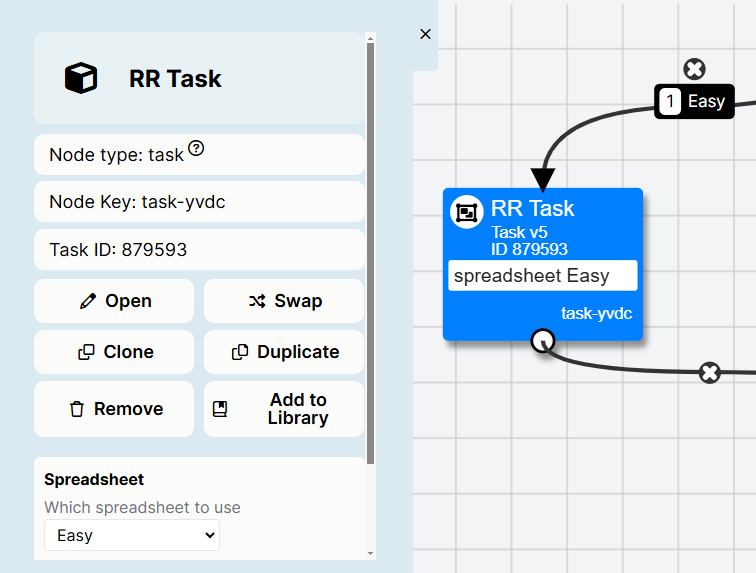

These are the tasks, questionnaires, games, or shops you want your participants to take part in. By clicking the node in the Experiment Tree, you can configure any manipulations to customise your study nodes for different experimental conditions.

If you make changes to your tasks or questionnaires after adding them to the Experiment Tree, you will need to update them to the latest versions.

These allow you to manipulate the path participants take through your experiment, for example by randomising them to different conditions or having them repeat a task.

By adding these nodes to your Experiment Tree and linking them together in different ways, you can perform randomisation, branching, counterbalancing, or even build a longitudinal study, all without touching a line of code.

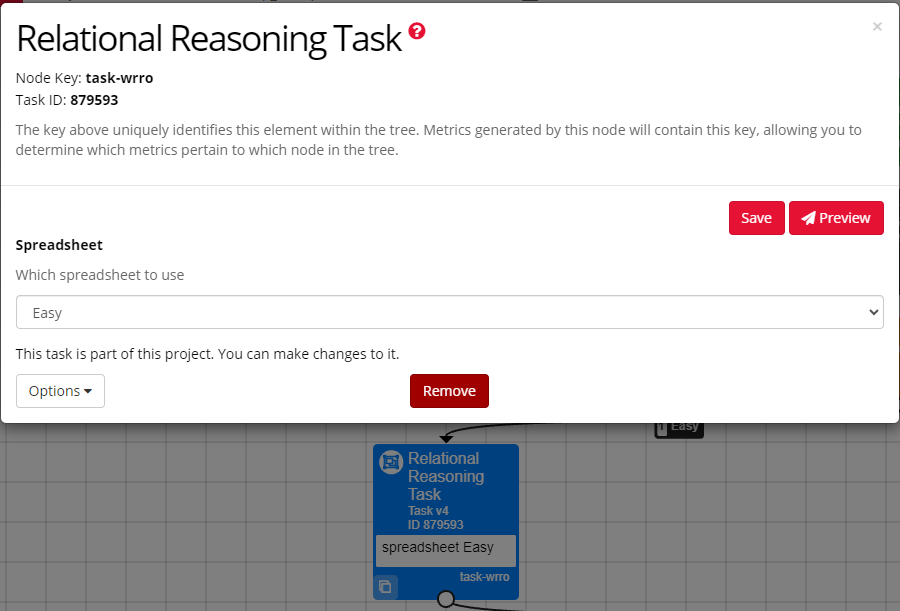

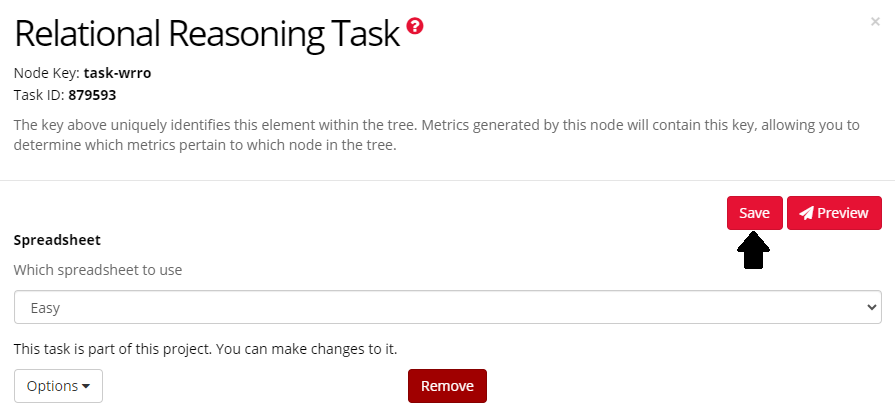

To change the configuration settings on any Node, click the Node in the experiment tree. This will open the Node Settings. The Node Settings and how you interact with them will be slightly different depending on whether you are using New Experiment Builder or Classic Experiment Builder.

New Experiment Builder:

For all nodes, you can:

You can access both these options quickly by right-clicking on the node and selecting 'Duplicate' or 'Remove'.

For Study Nodes (tasks, questionnaires, games, or Shop Builder tasks), you can also:

When you have made any changes to Node settings, scroll down and click 'Save Settings' to save these changes:

Core and Control Nodes have other settings available, depending on the node type. You can find more information about the settings available for different Nodes in our Experiment Tree Nodes Reference Guide.

Classic Experiment Builder:

For all nodes, you can:

For Study Nodes (tasks, questionnaires, games, or Shop Builder tasks), you can also:

When you have made any changes to Node settings, click 'Save' to save these changes:

Core and Control Nodes have other settings available, depending on the node type. You can find more information about the settings available for different Nodes in our Experiment Tree Nodes Reference Guide.

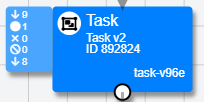

It is very important to keep your Study Nodes (Tasks, Questionnaires, etc.) up-to-date so that participants take part in the latest version of your experiment. The Study Nodes in your Experiment Tree do not update automatically: you need to update them yourself after you commit a new version of your Task/Questionnaire in the Task or Questionnaire Builder.

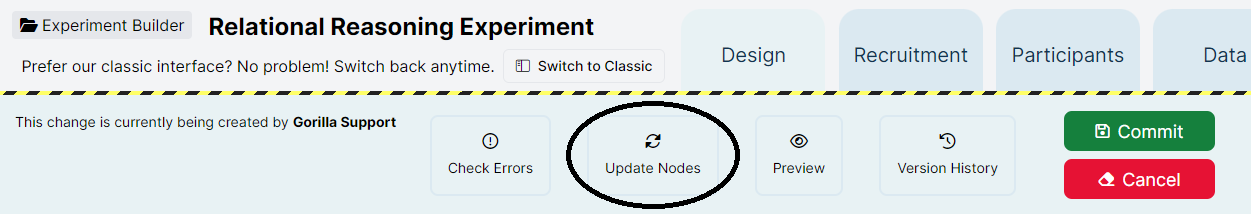

To update all the nodes in your experiment tree, you will need to follow slightly different steps depending on whether you're using New Experiment Builder or Classic Experiment Builder.

New Experiment Builder:

Click the Update Nodes button above the Experiment Tree:

A sidebar will appear with details of the nodes that need updating. To update them, click 'Update All'.

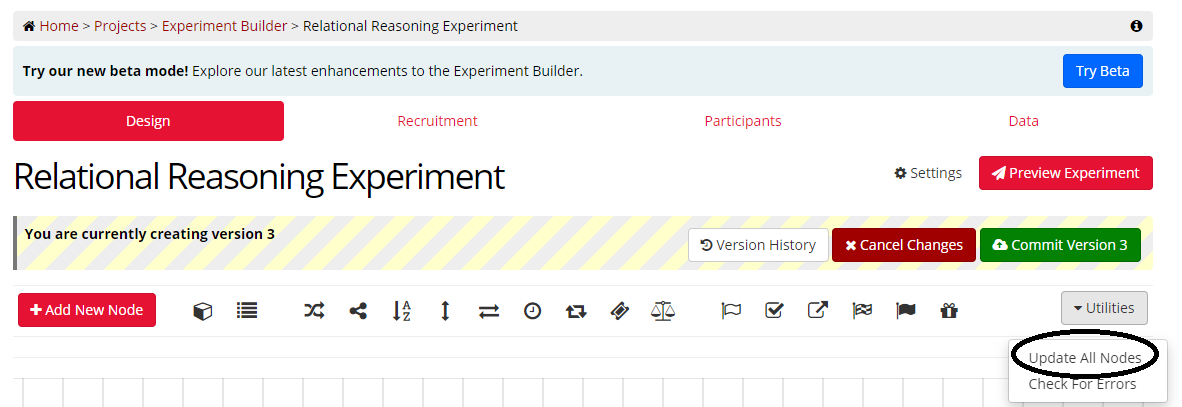

Classic Experiment Builder:

Go to the Utilities menu at the top-right and select Update All Nodes:

A window will pop up with details of the nodes that need updating. To update them, click 'Update All'.

You can also choose to update individual nodes to the latest version. This feature is not yet available in New Experiment Builder; to update individual nodes, use the 'Switch to Classic' button to switch back to Classic Experiment Builder, update the node(s), then switch back to New Experiment Builder.

To update an individual node, click on the Node, click 'Options' in the bottom left-hand corner, then 'Update to latest version'. If your Task/Questionnaire is not the latest version, an orange warning triangle will appear next to the 'Options' button.

At Gorilla, we also highly recommend previewing your experiment in full, using the Preview button in the top bar of the Experiment Builder. The Preview button will appear slightly different depending on whether you are using New Experiment Builder or Classic Experiment Builder.

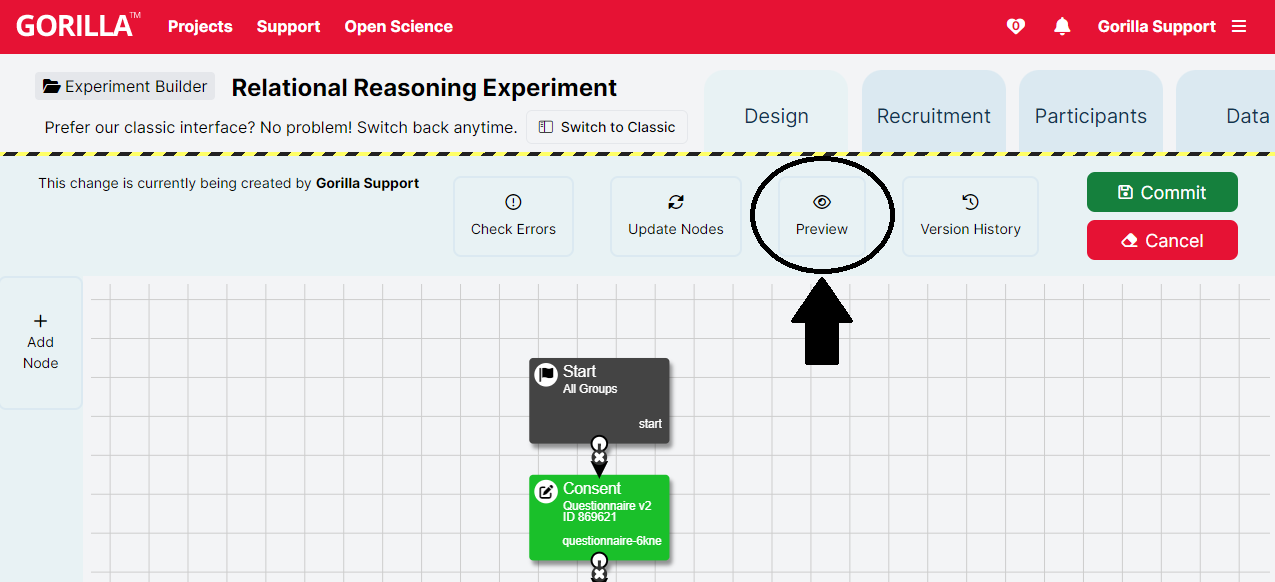

New Experiment Builder:

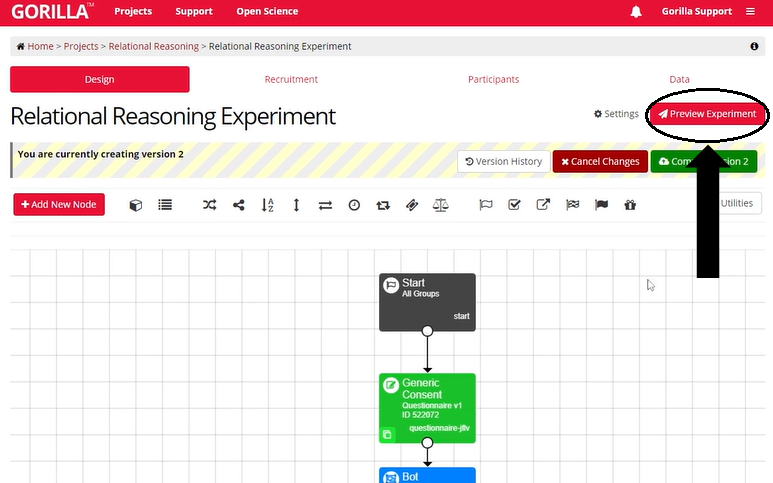

Classic Experiment Builder:

By clicking the Preview button, you can view the entire experiment from the perspective of a participant.

At the end of the preview, you will be able to download the data from all tasks and questionnaires in your experiment. You can choose to download data from the current task at any point during the preview, by going to the menu at the bottom-right and selecting 'Download CSV'. You can also use the 'Skip Task' or 'Skip Remaining Pages' option in this menu to skip on to the next node in the experiment tree.

Previewing your experiment and downloading the resulting data is a great way to check that all the participant responses are recorded in the way you expect, before launching your experiment.

If you're not seeing what you expect when you preview your experiment, check out our Troubleshooting Guide!

Once you have set up the experiment, you can commit it. Committing means saving a version of the experiment that you can always go back to. You must commit your experiment to send the most up-to-date version to participants.

Once participants start your experiment, they are locked in to that version. If you make any changes to your experiment after starting recruitment, only new participants who enter the experiment in the latest committed version will see these changes. You can check which version of the experiment participants completed in the Participants Tab of the Experiment Builder.

When you commit a new version of your experiment, any Randomiser, Order, Counterbalance, and Allocator Nodes reset: they start their randomisation again from scratch, without taking into account how many participants were assigned to each group in previous experiment versions. The Quota Node is the only node whose counts carry over across experiment versions.
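To see why a reset can leave your overall groups uneven, here is a small Python sketch. It is purely illustrative and is not how Gorilla stores allocations: the `allocation_log` list and both functions are hypothetical. It contrasts a balanced Randomiser-style count, which only considers the current version, with a Quota Node-style count, which accumulates across every version.

```python
from collections import Counter

# Hypothetical allocation log: (experiment version, group) for each participant.
allocation_log = [
    (1, "A"), (1, "A"), (1, "B"),  # participants who entered version 1
    (2, "B"), (2, "A"), (2, "B"),  # participants who entered version 2
]

def counts_in_version(log, version):
    """What a balanced Randomiser-style node takes into account: the current version only."""
    return Counter(group for v, group in log if v == version)

def counts_across_versions(log):
    """What a Quota Node-style count tracks: every version combined."""
    return Counter(group for _, group in log)

print(counts_in_version(allocation_log, 2))
print(counts_across_versions(allocation_log))
```

Here version 2 on its own looks unbalanced towards B, while the combined totals are even; after a commit, a resetting node would only ever see the version 2 counts.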

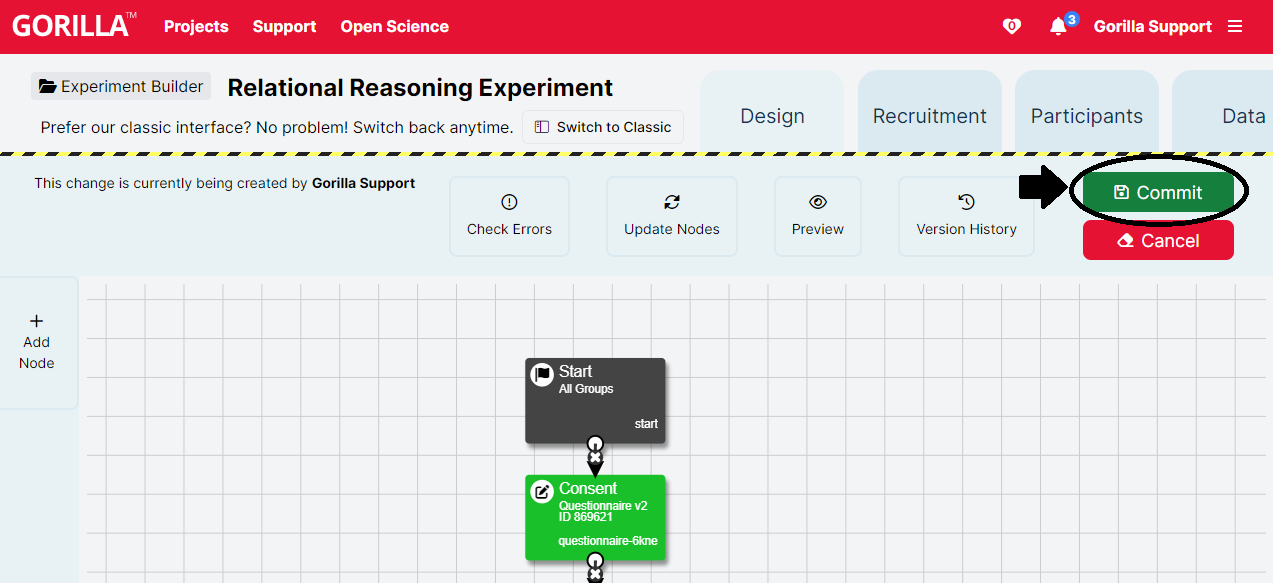

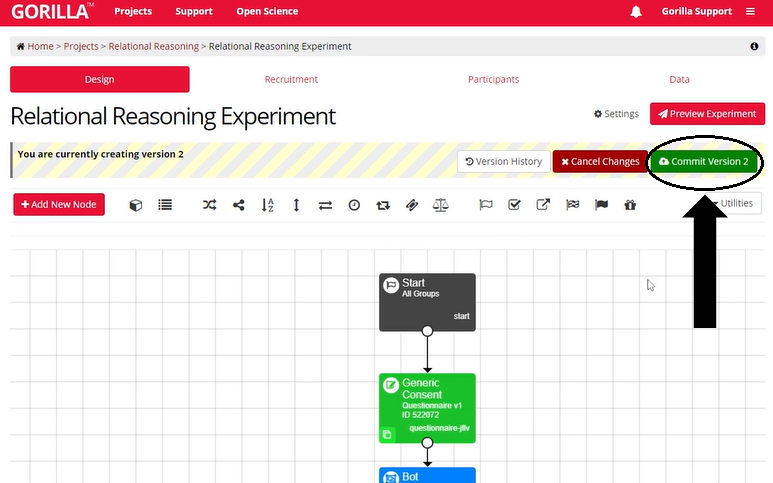

To commit your experiment, click the green 'Commit' button at the top right of the screen. The Commit button will appear slightly different depending on whether you are using New Experiment Builder or Classic Experiment Builder.

New Experiment Builder:

Classic Experiment Builder:

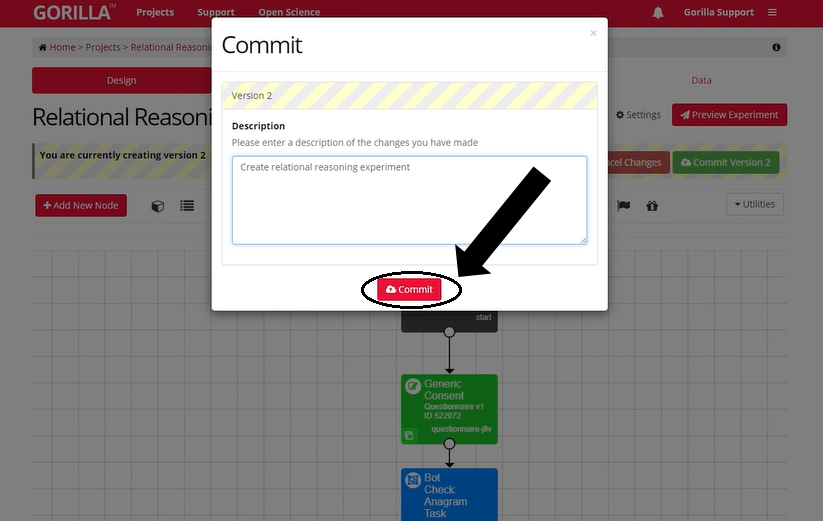

This will then open a text box where you should detail what has been created or changed in the current version of the experiment. Press the commit button at the bottom of this box to commit the new version of the experiment.

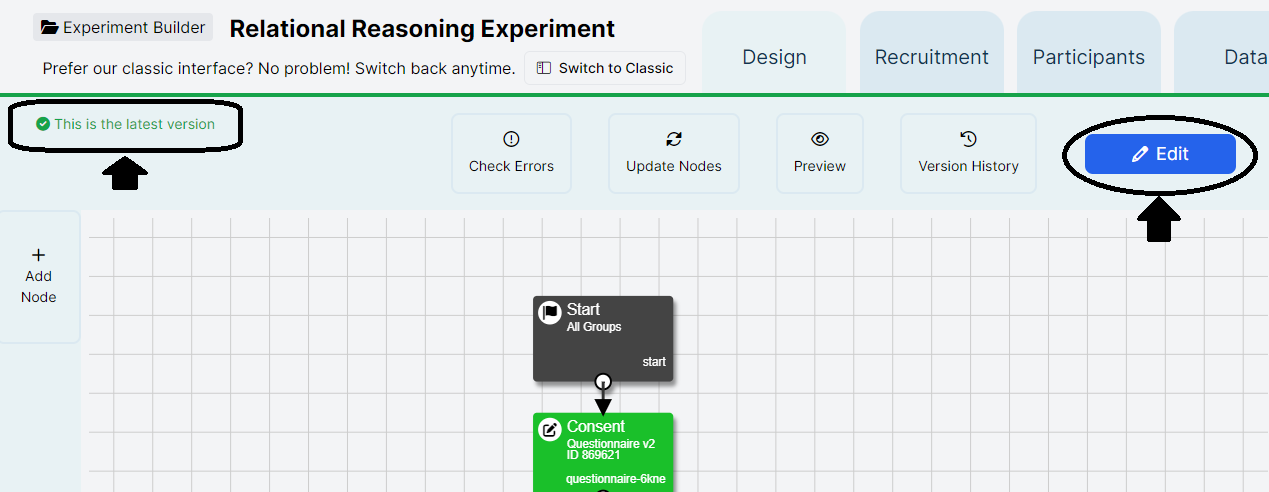

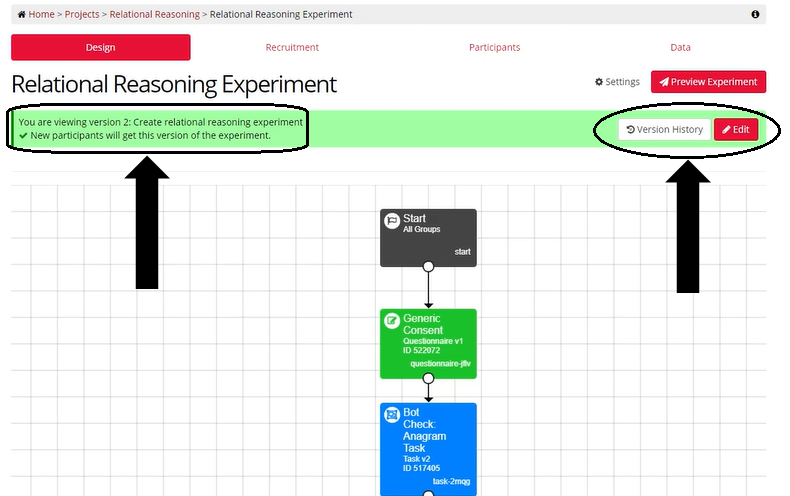

You will know the experiment version is committed when you can no longer edit the experiment. The Commit button has been replaced with the Edit button. Additionally, the striped "under construction" banner is now green. These changes indicate that we are now viewing the committed version of the experiment, not editing it.

A committed experiment appears slightly different depending on whether you are using New Experiment Builder or Classic Experiment Builder.

New Experiment Builder:

Classic Experiment Builder:

We've created a Walkthrough that will take you step-by-step through the journey of creating and launching your Experiments in Gorilla. There, you will find references to the support pages for all the crucial components that build your Projects.

Explore the From Creation to Launch Walkthrough!

Gorilla does not recruit participants for you; however, you can link an external recruitment service to your Gorilla Experiment, create a link to distribute, or invite participants you already know. The recruitment section is where you configure the method by which participants will access your experiment, optionally restrict the devices, browsers or locations they can take part from, and control how many participants you wish to recruit.

The recruitment policy you choose determines how participants will access your experiment. There are several options here: a simple link that you can put on your website or post to social media, uploading a CSV of email addresses and inviting them all to take part, or interfacing with other recruitment systems such as Prolific.com, MTurk, or SONA.

Go and check out our full list of recruitment policies.

The recruitment target is the number of participants you wish to recruit. All participants who are either currently live on your experiment, or marked as included on your participants page, will count towards this total.
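As a rough illustration of that rule, the Python sketch below counts how many places remain before the target is reached. The function name and the status labels (`"live"`, `"included"`, `"rejected"`) are hypothetical stand-ins for what you see on the Participants page; only the rule itself (live and included participants count) comes from this guide.

```python
def places_remaining(recruitment_target, statuses):
    """Places left before the recruitment target is reached.

    Following the guide, participants who are live or marked as included
    count toward the target; the status labels here are illustrative only.
    """
    counted = sum(1 for status in statuses if status in ("live", "included"))
    return max(recruitment_target - counted, 0)

statuses = ["included"] * 12 + ["live"] * 3 + ["rejected"] * 2
print(places_remaining(20, statuses))  # 5
```

Note that the two rejected participants do not use up places, which is why rejecting participants who have dropped out can let new participants in.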

To change the recruitment target:

To unassign tokens from an experiment and return them to your account, you can reduce the recruitment target. Note that any tokens from participants who are currently live on the experiment or have already completed the experiment cannot be unassigned.

The Recruitment Progress table on the Recruitment Tab summarises how many tokens have been consumed, are currently reserved, or are still available for your experiment. You can find a key to this table in our When Are Tokens Consumed? guide. For more general information about what happens to tokens as participants move through your experiment, see our Participant Status and Tokens guide.

Only the Project Owner can change the recruitment target settings. Only the Project Owner's tokens can be assigned to the experiment. Collaborators are not able to interact with these settings, so cannot contribute any of their own tokens or use their unlimited subscription (if they have one).

The Time Limit setting, found on your experiment recruitment page, allows you to automatically reject participants who do not complete your experiment, or who take longer to complete it than is considered reasonable.

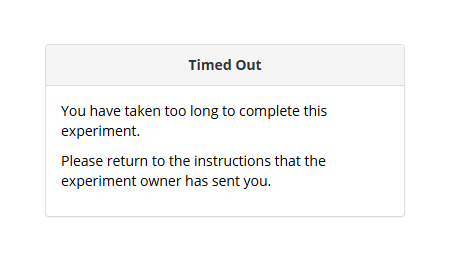

Once a Time Limit is set, in hours and minutes, participants who reach it will be automatically rejected, but will be allowed to finish their current task before being redirected to the Finish Node. Upon reaching the Finish Node, participants will see a Timed Out message.

You may wish to set a Time Limit because ‘Live’ participants reserve tokens, contributing towards your recruitment target. This means that participants who drop out without finishing your experiment can prevent more participants from entering your experiment until they are rejected.

Whilst you can reject participants manually, this requires monitoring your recruitment progress closely. Instead, you may choose to set a Time Limit to automate this process.

We suggest setting a Time Limit that is far longer than it could reasonably take to complete your experiment. For example, if your experiment should take 15 minutes to complete, you might set your Time Limit at 2 hours.

For this reason, we do not recommend using Time Limits for longitudinal studies. In a longitudinal study, the reasons for taking a long time to complete a study are much more numerous, which makes the padding you'd want to give the time limit excessively large and hard to estimate. When you can see your attrition and rejection numbers, you may wish to revise your Time Limit, and would then have to manually include the participants you’d automatically rejected.

Additionally, depending on ethics and your recruitment service, you will likely still have to pay participants who only complete the first half of your study for completing the first section, so you may wish to make use of their data.

Note: When using the Time Limit with recruitment services that offer a similar Time Limit, make sure that your Gorilla experiment Time Limit matches the time limit set in the recruitment service.
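The Time Limit check itself is simple arithmetic, sketched below in Python for the 15-minute experiment with a 2-hour limit mentioned earlier. This is a hypothetical helper, not Gorilla code: we assume the clock starts when the participant enters and that reaching the limit exactly counts as timed out. It also ignores the fact that Gorilla lets a timed-out participant finish their current task.

```python
from datetime import datetime, timedelta

def has_reached_time_limit(started_at, now, hours, minutes):
    """True once a participant has been live for at least the Time Limit (hours + minutes)."""
    return now - started_at >= timedelta(hours=hours, minutes=minutes)

start = datetime(2024, 5, 1, 10, 0)
# A slow participant (40 minutes) is still well within a 2-hour limit...
print(has_reached_time_limit(start, start + timedelta(minutes=40), 2, 0))          # False
# ...while someone who wandered off for over 2 hours has reached it.
print(has_reached_time_limit(start, start + timedelta(hours=2, minutes=5), 2, 0))  # True
```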

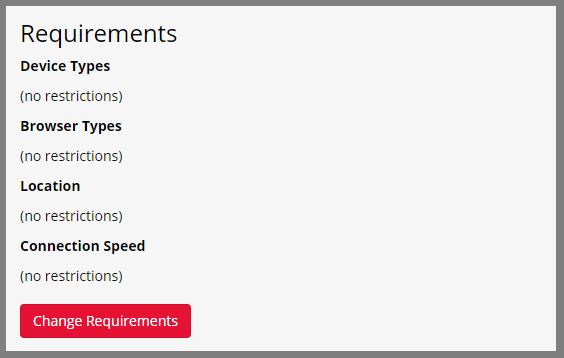

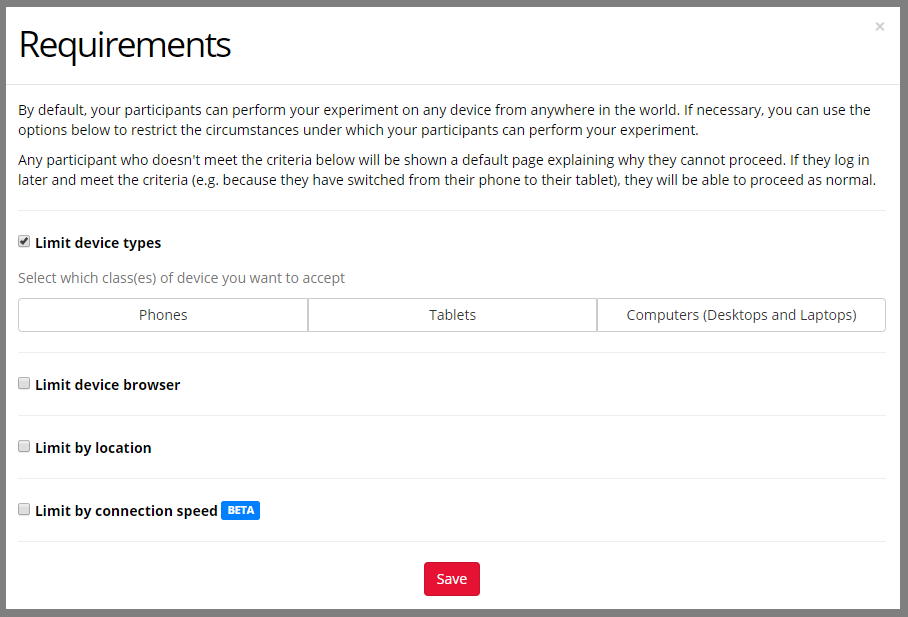

You can optionally restrict your participants by device type, connection speed, browsers or geographic location. Any participants not meeting these criteria will be shown an error message. Sometimes, this is helpful because certain aspects of tasks (e.g. displaying videos) might not function well in all countries, for low connection speeds and across all browsers. By previewing your experiment and piloting it, you can test whether you need to set any requirements.

To find out more about setting requirements, check out our Experiment Requirements guide.

Above you can see a close-up of the Requirements section of the Experiment Recruitment tab. If you have set any requirements, icons representing your requirements will appear under the headings.

It is not possible to make changes to the experiment version that live participants have already entered. Any participant who is already live on the experiment will complete the version they started.

You can make changes to your experiment while data is being collected, but only participants who start the experiment after you commit these changes will see the new version.

To make changes to your experiment while data is being collected, first edit any tasks or questionnaires within the experiment and commit your changes. Then, update your nodes in the experiment to use the new versions of the tasks or questionnaires. Finally, commit your changes to the experiment.

Once you have committed your changes to the experiment, any new participants who begin the experiment after that point will start with the new version of your experiment.

It is possible to change a recruitment policy at any time. However, switching between policies that do or don't require public IDs can cause disruption to any current participants. For example, if participants have originally been sent a simple link and the recruitment policy is subsequently changed to require a public ID or login, the link used previously will no longer work. Consider only changing the recruitment policy once a trial of the experiment has run successfully, or sending out updated invites to existing participants.

By default, participants can perform an experiment on any device from anywhere in the world. If necessary, it is possible to restrict the circumstances under which a participant can perform an experiment. These experiment requirements consist of: limiting device types to phones, tablets and/or computers; limiting to a geographical location via a 2-letter country code; limiting the browser used to Chrome, Safari, Edge, Firefox and/or Internet Explorer; and requiring a minimum connection speed. Any participant who doesn't meet these criteria will be shown a default page explaining why they cannot proceed. If they log in later and meet the criteria (e.g. because they have switched from their phone to their tablet), they will be able to proceed as normal.

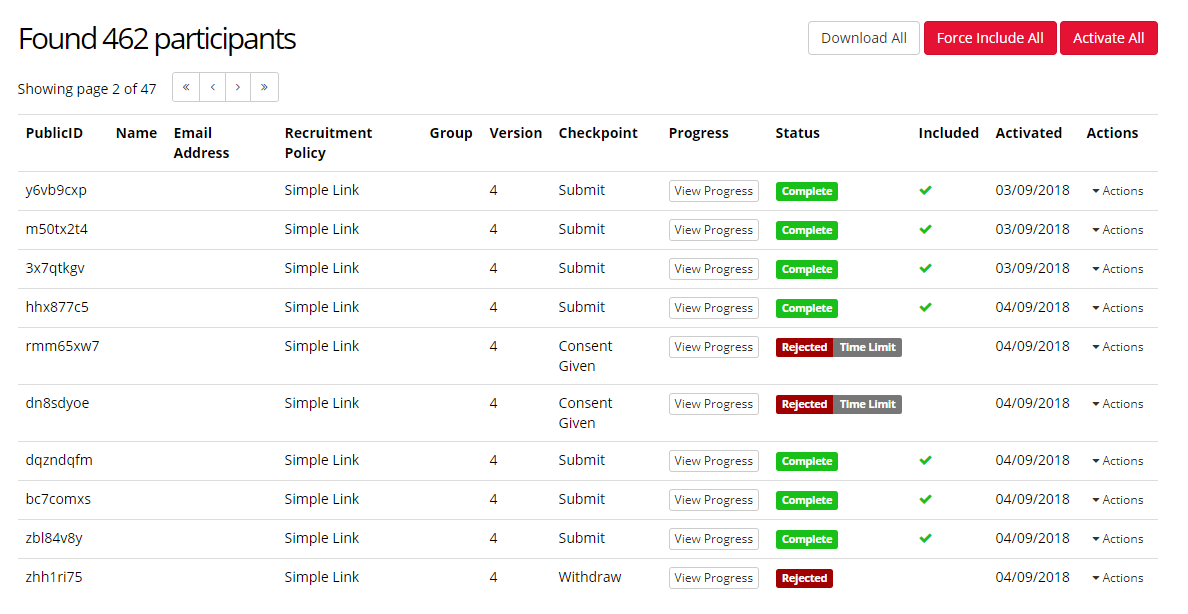

The participants screen allows you to observe and manage the participants who have been invited to, are registered for, or have completed your experiment.

Participants can also be rejected, included or deleted from this page. Explore our Participant Status and Tokens guide to learn what the different Participant Statuses mean, how they affect your tokens and how you can manipulate them when required.

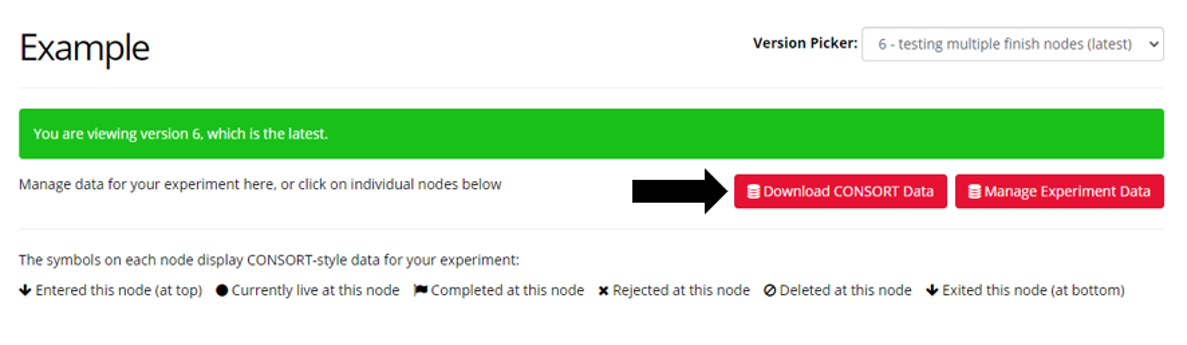

The Data tab shows the state of participants at each Node as a small list of icons with numbers. This means that you can see where participants dropped out or were rejected, and gain detailed attrition data.

For example, imagine a participant has been through three nodes before being rejected. The participant will be shown as entering and exiting three nodes, and then entering the node at which they were rejected and shown as rejected at that node.

A visual example of the attrition data for each node is shown below:

The Number of Participants who have entered the node

The Number of Participants who are still live on the node

The Number of Participants who were rejected

The Number of Participants who were deleted

The Number of Participants who have exited the node

In the example above, we have 9 participants entering the node and 8 exiting, with one remaining live. It may be that the participant has left the experiment at this point, and we may wish to manually reject them. This will set the number of live participants to 0, and the number of rejected participants to 1.
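The bookkeeping in that example can be sketched in Python. This is an illustration under our own assumption, which matches the 9 / 8 / 1 example but is not a documented Gorilla rule: every participant who entered a node is, at that node, in exactly one of the states exited, live, rejected or deleted. The function name and state labels are hypothetical.

```python
from collections import Counter

def node_attrition(statuses):
    """Summarise one node, given the state of every participant who entered it.

    Assumption: each participant who entered the node is in exactly one of
    'exited', 'live', 'rejected' or 'deleted' at that node.
    """
    counts = Counter(statuses)
    return {
        "entered": len(statuses),
        "live": counts["live"],
        "rejected": counts["rejected"],
        "deleted": counts["deleted"],
        "exited": counts["exited"],
    }

before = node_attrition(["exited"] * 8 + ["live"])
after = node_attrition(["exited"] * 8 + ["rejected"])  # the live participant is manually rejected
print(before)
print(after)
```

Manually rejecting the remaining participant moves them from live to rejected; the number who entered stays at 9.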

When your Experiment contains an Order Node, the CONSORT data refers to the Node position rather than the Node itself. For example, if a Flanker Task and a Thatcher Task are connected to an Order Node, with the Flanker Task in the first position, the CONSORT data for the Flanker Node will refer to the first task participants saw, whether that was the Flanker or the Thatcher Task, rather than the attrition data for the Flanker Task itself.
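A small Python sketch shows how counting by position differs from counting by task. The participant IDs and task orders are made up for illustration, and the code does not read Gorilla's actual CONSORT export; it only mirrors the position-versus-task distinction described in the paragraph above.

```python
from collections import Counter

# Hypothetical task orders produced by an Order Node; p3 dropped out after one task.
orders = {
    "p1": ["Flanker", "Thatcher"],
    "p2": ["Thatcher", "Flanker"],
    "p3": ["Thatcher"],
}

# Entries counted by position in the Order Node (how the CONSORT data is reported).
by_position = Counter(pos for tasks in orders.values() for pos, _ in enumerate(tasks, start=1))
# Entries counted by task identity instead.
by_task = Counter(task for tasks in orders.values() for task in tasks)

print(by_position)  # first position: 3 entries, second position: 2
print(by_task)      # Flanker: 2 entries, Thatcher: 3
```

So the node in the first slot (the Flanker Node in the example) would report 3 entries, even though only 2 participants actually did the Flanker Task.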

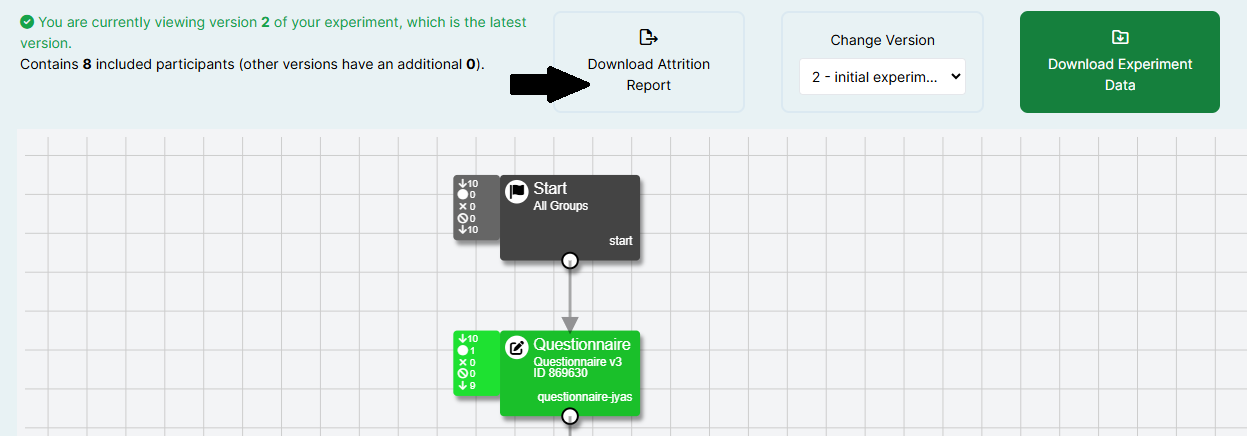

In the Data Tab of the Experiment Builder, you can download a CSV file which contains all the above information for each node in your experiment in an Attrition Report. You will need to follow slightly different steps depending on whether you’re using New Experiment Builder or Classic Experiment Builder.

New Experiment Builder:

First, select the version of your experiment for which you want to access attrition data from the Change Version dropdown. Then, click the 'Download Attrition Report' button to download this report:

Classic Experiment Builder:

First, select the version of your experiment for which you want to access attrition data from the Version Picker dropdown. Then, click the 'Download CONSORT Data' button to download this report:

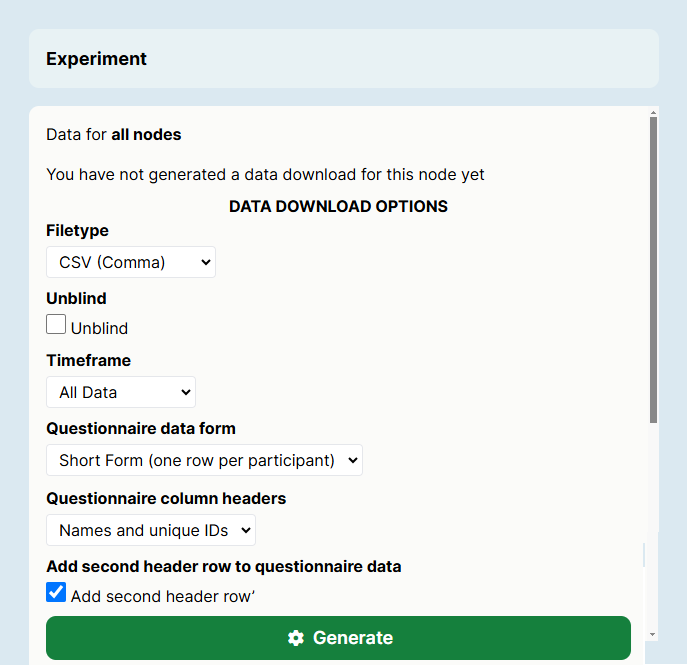

The Data tab of the Experiment Builder allows you to download data from the various Task and Questionnaire Nodes of your experiment in the form of a spreadsheet.

Data is presented in long-format, with one row per event. For questionnaires only, there is an option to instead download the data in short-format, with one row per participant. You can find more information about this in our Data guide.
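If you download questionnaire data in long format and want one row per participant for analysis, the pivot can be sketched in plain Python as below. The column names (`Participant Private ID`, `Question Key`, `Response`) and the values are illustrative assumptions; check the headers in your own download (and the Data guide) before adapting this.

```python
import csv
import io

# Illustrative long-format data: one row per response.
LONG_CSV = """Participant Private ID,Question Key,Response
101,age,34
101,handedness,right
102,age,27
102,handedness,left
"""

def long_to_short(rows, id_col, key_col, value_col):
    """Pivot one-row-per-response data into one row per participant."""
    short = {}
    for row in rows:
        record = short.setdefault(row[id_col], {id_col: row[id_col]})
        record[row[key_col]] = row[value_col]
    return list(short.values())

rows = list(csv.DictReader(io.StringIO(LONG_CSV)))
short = long_to_short(rows, "Participant Private ID", "Question Key", "Response")
for record in short:
    print(record)
```

Each participant becomes one dictionary with one entry per question key, which is the same shape as the short-format questionnaire download.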

The data tab looks like this:

This screenshot shows the new Experiment Builder interface. If you’re still using the classic interface, it will appear slightly different.

To download your data:

This screenshot shows the new Experiment Builder interface. If you’re still using the classic interface, it will appear slightly different.

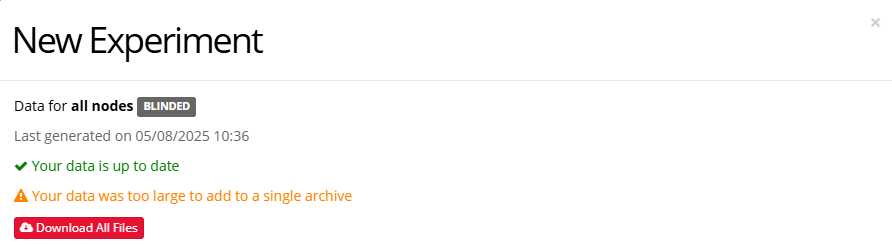

Large data sets

By default, Gorilla will zip your data files into a single archive for you to download. However, for large data sets, this can cause the generation process to stall. To prevent this, Gorilla will not create a zip archive for datasets that exceed a given size. Instead, a 'Download All Files' button will appear, allowing you to download all files with a single click:

(This screenshot shows the new Experiment Builder interface. If you’re still using the classic interface, it will appear slightly different.)

You can also download data from an individual task or questionnaire node, or from a specific participant!

To download data from an individual node, click that node in your Experiment Tree from the Data Tab. This will open the data-download menu for that specific node.

To download data from a specific participant, go to the Participants tab, find the participant, and use Actions -> Download Data. This will open the data-download menu for that participant's data.

For more information on how to access and download your data, and an in-depth explanation of the options available for questionnaire data, take a look at the Data guide. For guidance on how to preprocess and analyse your data, check out our Analysis guide.

Data saved to the Store is data collected from the participant's responses that can be used to alter the experiment in real time, depending on those responses. Essentially, the Store lets you ‘carry’ information from one part of your task or questionnaire to others within the same experiment.

Here are some examples of when to save data to the Store:

Learn how you can manipulate your experiment using the Store through our Binding to the Store Guide.

Randomisation is an important aspect of many experiments as it reduces bias that could impact the outcome of the study. We created some excellent documentation pages to walk you through randomisation in Gorilla.

This table presents the different randomisation options Gorilla offers, and explains in what circumstances you should use which.

| Type of randomisation | Useful for | Use case | Location |
|---|---|---|---|
| Trial order | Within subjects | If you want to present a list of trials in the spreadsheet in a random (shuffled) order. | Spreadsheet Randomisation: Randomise Trials |
| Block order | Within subjects | If you have two (or more) blocks, you may want participants to see them in a different order. This can be achieved using the spreadsheet. | Spreadsheet Randomisation: Randomise Blocks |
| Stimuli set | Between subjects | If you want to present two different stimuli sets to participants, for instance an A and a B set, upload two different spreadsheets to the task. This can be useful for creating a pre-test and a post-test which are otherwise identical. Upload all the stimuli to the stimuli tab as normal. | Multiple Spreadsheets in the Task Builder |
| Subset of stimuli | Between subjects | If you want to show participants a random subset of stimuli, you can select a random subset of stimuli from the spreadsheet. | Spreadsheet Randomisation: Select Random Subset |
| Task order | Within subjects | If you want to randomise the order of Task and Questionnaire Nodes within your experiment tree, use the Order Node. | Experiment Tree: Order Node |
| Experiment group | Between subjects | If you want to distribute participants at random between 2 (or more) different paths through your experiment tree, use the Randomiser Node. | Experiment Tree: Randomiser Node |
| Experiment group | Between subjects | If you want to assign different stimuli sets to different participants, use the Counterbalance Node in conjunction with spreadsheet bindings. This is especially helpful if you have more than 4 stimuli sets: the same effect can be achieved with Randomiser Nodes, but the more stimuli sets you have, the more Randomiser Nodes you would need. | Experiment Tree: Counterbalance Node |

What follows is a description of some of the complexities surrounding randomisation in Gorilla.

In short, there are two types of randomisation: with replacement and without replacement. These differ in how they allocate participants to different conditions and it’s important to understand these differences.

There are also various ways that Gorilla can handle attrition!

The best way to think about random with replacement is that it is like a coin toss, or dice roll. Each coin toss is independent of what has happened before.

If we have a randomiser going to two groups, it’s like tossing a coin for each participant. On average, we would expect a 50:50 ratio of heads to tails, but you could get a run of heads by sheer (bad) luck. We are unlikely to end up with a ratio of exactly 50:50, but it will probably be close. The larger the sample, the closer the ratio will get.

This is called WITH REPLACEMENT because each time a participant is assigned a group, Heads or Tails, all options are still available for the next participant. Each allocation is independent of all previous allocations.
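The coin-toss idea can be sketched in a few lines of Python. This illustrates random allocation with replacement in general; it is not Gorilla's internal code, and the function name `assign_with_replacement` is hypothetical.

```python
import random

def assign_with_replacement(n_participants, groups, seed=None):
    """Allocate each participant independently, like a fresh coin toss each time."""
    rng = random.Random(seed)
    # Every draw sees the full set of groups: earlier allocations have no influence.
    return [rng.choice(groups) for _ in range(n_participants)]

allocations = assign_with_replacement(12, ["Heads", "Tails"], seed=1)
print(allocations)
print({group: allocations.count(group) for group in ["Heads", "Tails"]})
```

Run it with different seeds and the split of 12 participants will often be something like 7:5 or 8:4 rather than exactly 6:6.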

The best way to think about the balanced randomiser is like a deck of cards. Each draw is dependent on what has been drawn before.

The colour of the card (red or black) determines which branch participants go down. Balanced with a 2:2 ratio means we have 4 cards: 2 black and 2 red. Cards are handed out in a random order (e.g. BBRR). In each lot of 4 cards, we will have the 2:2 ratio exactly. For the next four participants, the process is repeated. So over 12 participants we would get 3 lots of 4 cards, with 6 red and 6 black. For instance, the three lots could be: BRBR, then RRBB, then RBBR. Importantly, consider the first lot. Once we’ve had BRB, the last card has to be R. There is no chance of it being anything else.
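The deck-of-cards picture translates directly into code. The Python sketch below builds a shuffled deck from the ratio, deals one card per participant without putting it back, and builds a fresh deck once it runs out. It illustrates balanced (blocked) randomisation in general; the function `assign_balanced` and its `ratio` argument are hypothetical and not Gorilla's implementation.

```python
import random

def assign_balanced(n_participants, ratio, seed=None):
    """Deal participants from shuffled 'decks' built from the ratio.

    ratio maps group name -> cards per deck, e.g. {"B": 2, "R": 2} for 2:2.
    """
    rng = random.Random(seed)
    allocations = []
    deck = []
    while len(allocations) < n_participants:
        if not deck:
            # Start a new lot: exactly `count` cards for each group, shuffled.
            deck = [group for group, count in ratio.items() for _ in range(count)]
            rng.shuffle(deck)
        # Draw without replacement: the card leaves the deck.
        allocations.append(deck.pop())
    return allocations

allocations = assign_balanced(12, {"B": 2, "R": 2}, seed=3)
lots = [allocations[i:i + 4] for i in range(0, 12, 4)]
print(lots)
```

Whatever the seed, every complete lot of 4 contains exactly two B and two R cards, so 12 participants always split 6:6.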

A 2:2 ratio is different from 1:1 ratio. With 1:1 there are only 2 cards, 1 red and 1 black, so there are only two possible orders (BR and RB). So over 12 participants we might get BR, RB, BR, RB, BR, BR.

A 10:10 ratio means we have 20 cards, 10 red and 10 black. We could (by chance) have a run of 10 red followed by 10 black, then 10 black followed by 10 red. In that unlikely event, the groups would only be balanced at N = 20 and N = 40. For example, if we stopped at N = 15, the first 10 would be allocated to red, leaving only 5 allocated to black.

With a 2:2 ratio, one group can only get 2 ahead of the other group. If you have already sampled 100 participants equally into black and red and you only want to sample two more, it may be that these last two both get red cards (RRBB), leading to 50 black and 52 red.

With 1:1 ratio this goes down to 1. If you have already sampled 100 participants equally into black and red and you only want to sample two more, one will be black and one red.

With 10:10 ratio, this goes up to 10. This is important because a larger ratio increases your chance of unequal conditions if you end recruitment early.

This is called RANDOM WITHOUT REPLACEMENT because, in each lot of participants (2, 4 or 20 for the 1:1, 2:2 and 10:10 ratios), cards are dealt out without replacing the cards that have been taken by previous participants. At the end of each lot we will have dealt participants the exact ratio we set. Allocations are dependent on all previous allocations in the lot.
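The "how far ahead can one group get" point for the 1:1, 2:2 and 10:10 ratios can be checked with a short Python sketch. The helper `running_gap` is hypothetical; it tracks the difference in group sizes after each participant, and we feed it the worst possible lot, where every card of one colour is dealt before the other.

```python
def running_gap(allocations, group_a="R", group_b="B"):
    """Absolute difference in group sizes after each participant is allocated."""
    gaps = []
    count_a = count_b = 0
    for group in allocations:
        if group == group_a:
            count_a += 1
        elif group == group_b:
            count_b += 1
        gaps.append(abs(count_a - count_b))
    return gaps

# Worst case within one lot: every card of one colour is dealt before the other.
for per_group in (1, 2, 10):
    worst_case_lot = ["R"] * per_group + ["B"] * per_group
    print(f"{per_group}:{per_group} ratio -> largest possible gap {max(running_gap(worst_case_lot))}")
```

This prints gaps of 1, 2 and 10, matching the text: the larger the lot, the bigger the imbalance you risk if recruitment stops part-way through a lot.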


In Gorilla the Randomiser node, Order node and Counterbalance node have no knowledge of subsequent attrition. Attrition is when a participant drops out of your experiment part way through. This means that even with balanced randomisation, you may end up with unequal groups if participants drop out. Drop-out can be caused by a range of factors: participants can get bored, have their attention called elsewhere, dislike the task, or stop participating for whatever reason.

Worked example:

Scenario 1: We have a Time Limit set, no manual intervention.

6. After the appropriate time, both remaining live participants are automatically rejected.

7. Our experiment is no longer full. New participants join.

8. Gorilla generates a new block of participants (BBAA). Remember, the randomiser has no knowledge of subsequent attrition.

9. The first participant starts and immediately drops out, handing back their token. That’s the first B of our new BBAA lot used.

10. The next participant who joins takes up the next B on our BBAA lot and goes down the B branch and completes the task.

11. The next participant goes down the A branch and completes the task.

Summary: At the end we have 12 completes, 5 on the A branch and 7 on the B branch.

We might report this as: 12 participants randomised to conditions (5 condition A, 7 condition B) completed the study. 14 participants were recruited overall; 2 participants dropped out of the A condition.

Scenario 2: We don’t have a Timelimit set, manual intervention.

6. Gorilla has sent us an email saying our experiment is full. We wait 2 hours to allow people to complete. After this time, we are happy to manually reject Live participants, as they have probably dropped out.

7. We look at our participants dashboard and see that we have 6 completes on the B branch and 4 completes on the A branch.

8. We edit our experiment and change the randomiser ratio from A2:B2 to A2:B0.

9. We reject the two live participants.

10. Recruitment resumes, and we get two participants that are sent down the A branch by our new randomiser ratio and complete the task.

Summary: At the end we have 12 completes, 6 on the A branch and 6 on the B branch.

We might report this as: 12 participants were randomly assigned to groups and recruited until 6 participants had completed each condition.

Conclusion

In scenario 2, we ended up with the ideal number of participants in each condition, but we lost the information about attrition. In scenario 1, we ended up with small differences in group size, but we kept the information about attrition. Neither is ideal, but good science is often about compromises.

Before we look at possible solutions, let's take a deeper look at attrition and why it is important.

To take an extreme example, imagine we have two groups, one with a positive mood induction (kittens and puppies) and one with a negative mood induction (spiders and snakes), each followed by a delay discounting task. Our hypothesis is that a poor mood makes people favour money now over money later, whereas a good mood pushes this horizon further out.

In an ideal world, we’d recruit 50 participants to each group and they would all complete the experiment. But this is the real world, and that’s unlikely to happen. Importantly, we'd probably expect much more attrition in the negative mood group.

Three scenarios can happen next.

Scenario 1: We have a Timelimit set, no manual intervention.

By the time we have 100 complete datasets, our methods section might read as follows:

100 participants completed the study. They were randomised to conditions (70 in condition A, 30 in condition B). Overall, 200 participants were recruited; 30 participants dropped out of condition A and 70 dropped out of condition B.

This tells the reader that we had significant asymmetric attrition and that the negative mood induction group are probably systematically different to the positive mood induction group. They have self-selected for being less sensitive to spiders and snakes.

Scenario 2: We don't have a Timelimit set, manual intervention.

Our methods section might read as follows: 100 participants were randomly assigned to groups and recruited until 50 participants had completed each condition.

The asymmetric attrition information is hidden from the reader.

Scenario 3: Recruit in 2 phases, changing the randomiser ratio.

An alternative, more elegant approach is to split our recruitment into 2 phases.

In phase 1, we collect 50 Ns' worth of data at a 4:4 ratio. We then determine the attrition rate in each group and adjust the ratio accordingly.

In phase 2, we collect 50 Ns' worth of data at a 4:8 or 4:12 ratio, whichever fits our attrition rate. By doing so, we aim to get both groups to 50 completes at about the same time.
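One way to choose the phase 2 ratio is to give each condition a share inversely proportional to its phase 1 completion rate. The sketch below assumes that rule; the `phase2_ratio` name and the base of 4 slots are illustrative choices, not a Gorilla setting. For instance, if condition A completes at 90% and condition B at 45%, B needs twice as many slots per lot, which gives the 4:8 ratio.

```python
def phase2_ratio(completion_rate, base=4):
    """Suggest per-lot slots so each condition yields roughly equal completes.

    completion_rate maps each condition to the fraction of allocated
    participants who completed in phase 1. The condition with the best
    completion rate keeps `base` slots; the others get proportionally more.
    """
    if any(rate <= 0 for rate in completion_rate.values()):
        raise ValueError("every condition needs at least one phase 1 complete")
    best = max(completion_rate.values())
    return {cond: round(base * best / rate) for cond, rate in completion_rate.items()}


print(phase2_ratio({"A": 0.9, "B": 0.45}))  # {'A': 4, 'B': 8}
print(phase2_ratio({"A": 0.9, "B": 0.3}))   # {'A': 4, 'B': 12}
```

Rounding to whole slots means the completes will only be approximately equal, which is why the text aims for both groups to reach 50 at "about" the same time.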

In Gorilla, we have Quota Nodes, which are attrition-sensitive. These allow us to set a quota on a branch of our experiment. Once the quota is full, participants will no longer be sent down that branch. This gives us the effect of setting randomiser branches to 0 once we have enough participants for that condition, without the need for manual intervention. If a participant is rejected part-way through an experiment (after having passed through the Quota node), they will not count towards the set Quota, and the Quota node will allow another participant through in their place.
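The attrition-sensitive behaviour described above can be modelled as a set of participants who currently hold a place on the branch. This is a toy sketch under that assumption (the `QuotaBranch` class is hypothetical, not part of Gorilla): a rejected participant is removed from the set, which frees their place for someone new.

```python
class QuotaBranch:
    """Toy model of an attrition-sensitive quota on one branch."""

    def __init__(self, quota):
        self.quota = quota
        self.holders = set()  # participants currently counting towards the quota

    def try_enter(self, participant_id):
        """Return True if the participant is let through the quota."""
        if participant_id in self.holders:
            return True   # already holds a place
        if len(self.holders) >= self.quota:
            return False  # branch is full
        self.holders.add(participant_id)
        return True

    def reject(self, participant_id):
        """A rejected participant stops counting, freeing their place."""
        self.holders.discard(participant_id)


branch = QuotaBranch(quota=2)
print(branch.try_enter("p1"), branch.try_enter("p2"), branch.try_enter("p3"))  # True True False
branch.reject("p1")
print(branch.try_enter("p3"))  # True
```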

This feature should be used with care so that we don’t hide asymmetric attrition from ourselves or others.

The Allocator Node combines the Randomiser and Quota nodes, so random allocation becomes sensitive to subsequent attrition.
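A simple way to picture an attrition-sensitive randomiser is to extend the card-dealing analogy: deal places without replacement, but put a participant's card back into the deck if they are rejected. The `ToyAllocator` class below is a hypothetical sketch of that idea, not Gorilla's actual algorithm; the per-branch targets and the weighting by remaining places are our own assumptions.

```python
import random


class ToyAllocator:
    """Deal places without replacement; return a place if its holder is rejected."""

    def __init__(self, targets, seed=0):
        self.remaining = dict(targets)  # branch -> places still open
        self.assigned = {}              # participant ID -> branch
        self.rng = random.Random(seed)

    def allocate(self, participant_id):
        open_branches = [b for b, n in self.remaining.items() if n > 0]
        if not open_branches:
            return None  # every branch has reached its target
        # Drawing one open place at random = weighting branches by places left.
        weights = [self.remaining[b] for b in open_branches]
        branch = self.rng.choices(open_branches, weights=weights)[0]
        self.remaining[branch] -= 1
        self.assigned[participant_id] = branch
        return branch

    def reject(self, participant_id):
        branch = self.assigned.pop(participant_id, None)
        if branch is not None:
            self.remaining[branch] += 1  # the card goes back into the deck


allocator = ToyAllocator({"A": 6, "B": 6})
first_twelve = [allocator.allocate(f"p{i}") for i in range(12)]
print(first_twelve.count("A"), first_twelve.count("B"))  # 6 6
lost_branch = allocator.assigned["p0"]
allocator.reject("p0")
print(allocator.allocate("p12") == lost_branch)  # True
```

With targets of 6 and 6, the first 12 participants fill both branches exactly; if one of them is then rejected, the next participant is sent down the branch that lost a place.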

Going back to the original example:

Note: The Allocator node does not account for how many people have gone through each branch across previous experiment versions. If you edit your experiment during recruitment, this will create a new version of the experiment. The Allocator node will reset for each new version of the experiment.

If you want to run a longitudinal or multi-part study there are a few more aspects you'll need to consider, particularly when building your experiment tree and choosing a recruitment policy. It's best to read through this page thoroughly to make sure you've set everything up properly.

If you're running a longitudinal study, you don't need to wait until participants have completed all the stages before accessing their data! By manually including participants while they are still taking part, you can immediately access the data they have generated so far, and then access future data as soon as it is ready without having to consume another token. Simply regenerate your data once the participant has completed the next stage of your study, and you will be able to download all the data they have produced so far at no extra cost.

You can use one experiment tree for your whole study, and make good use of the Delay Node and Checkpoint Node.

For some recruitment platforms, such as Prolific, you can use the Redirect Node instead of the Delay Node to send participants back to the recruitment platform at the end of each session. You can add a message to participants when they reach a Redirect Node, and also set a delay to prevent them returning too early. When you invite them back through the recruitment platform on the next day of participation, they can pick up where they left off in the experiment tree.

If you will be using Prolific for a longitudinal experiment, we recommend using their Gorilla Integration Guide FAQ "How do I set up a longitudinal (multi-part) study on Gorilla and Prolific" (scroll to the bottom of the linked page).

Once participants start your experiment, they are locked to that version. If you make any changes to your experiment after starting recruitment, only new participants who enter the latest committed version will see these changes. You can check which version of the experiment participants completed in the Participants Tab of the Experiment Builder.

You will need a way to identify your participants when they return for the next session of your experiment, to prevent Gorilla processing them as a new participant. This will also allow participants to resume the experiment where they left off in the previous session, because Gorilla will be able to identify them when they return. Fortunately, many of our recruitment policies (as well as external recruitment providers such as Prolific) allow you to do this with ease.

This is easiest to achieve using an ID-based recruitment policy, which allows your participants to return by logging in (e.g. Supervised or Email ID) or by clicking their personalised link (e.g. Email Shot). These recruitment policies prevent participants entering your study more than once, and allow them to continue later after completing part of the experiment. Most external participant recruitment services assign each participant a unique ID, so Gorilla will assume that two entries into the study with the same ID are the same person and won't process them as a new participant. If the participant returns with the same ID, they will continue in the experiment tree at the next node from where they left off.
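The resume-by-ID behaviour can be pictured as a lookup from participant ID to progress. The `ToyTree` class below is a hypothetical model, not Gorilla's implementation, and the node names are made up: an ID seen before picks up at its next node, while an unseen ID starts from the beginning, which is why a stable ID matters between sessions.

```python
class ToyTree:
    """Resume-by-ID model: known IDs continue, new IDs start at the top."""

    def __init__(self, nodes):
        self.nodes = nodes   # node names in tree order
        self.progress = {}   # participant ID -> index of their next node

    def next_node(self, participant_id):
        index = self.progress.setdefault(participant_id, 0)  # a new ID starts at 0
        return self.nodes[index] if index < len(self.nodes) else None  # None = finished

    def complete_node(self, participant_id):
        self.progress[participant_id] = self.progress.get(participant_id, 0) + 1


tree = ToyTree(["Consent", "Session 1", "Delay", "Session 2", "Finish"])
for _ in range(3):                  # Consent, Session 1 and the Delay are done
    tree.complete_node("PID-123")
print(tree.next_node("PID-123"))    # Session 2
print(tree.next_node("PID-456"))    # Consent
```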

Note: We don't recommend you use Simple Link with a Delay Node because, by default, no reminder email will be sent to participants and they won't be able to log back in and resume where they left off. If you do decide to use Simple Link for any reason, you must check Send Reminder and Reminder Form in the configuration settings of the Delay Node. This will require collecting participants' email addresses, so make sure you have ethical clearance for this first.

Longitudinal studies are likely to have higher attrition rates than studies that can be completed all in one sitting. You can monitor your participants' status in the Participants tab of the Experiment Builder to track which participants have yet to return for the next session of your experiment. Doing this in combination with a Checkpoint Node (see above) makes this even easier.

In the above example, the Participants tab shows which Checkpoint each participant has passed through. Today, on Day 3 of the study, we expect everyone to have passed through Session 3 and be marked as "Complete", but two participants are still "Live". The first participant has passed through the "Session 1" Checkpoint and did not return for Session 2, so we might want to use this information to reject them from participating further. Another participant is due to complete Session 3 today but hasn't returned yet, so they might need a nudge to come back and finish the study before the end of the day. From the Participants screen, it's very easy to see the status of all your participants so that you can manage attrition.
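The reasoning in this example can be written as a small rule. The sketch below assumes Session N is due on Day N and that you know the number of the last session Checkpoint each Live participant passed; `triage` and its labels are hypothetical, and the final decision to nudge or reject remains yours.

```python
def triage(last_checkpoint, study_day):
    """Suggest an action for a Live participant.

    Assumes Session N is due on Day N; last_checkpoint is the number of
    the last session Checkpoint the participant passed (0 if none).
    """
    if last_checkpoint >= study_day:
        return "on track"
    if last_checkpoint == study_day - 1:
        return "nudge"  # today's session is due but not done yet
    return "consider rejecting"  # missed at least one earlier session


for passed in (1, 2, 3):
    print(f"Day 3, passed Session {passed}: {triage(passed, study_day=3)}")
```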

For insights and top tips from real researchers, check out talks on multi-session studies from BeOnline:

'A (half) marathon not a sprint: Multi-session memory studies online' (Emma James)

For general troubleshooting advice visit our Troubleshooting Guide.

If you don't find an answer to your question reach out to our friendly support team via the Contact Form - we are happy to help!

To ensure your participants are real humans and not bots, we have a collection of Sample Bot Check tasks on our samples page.

You can choose from a variety of pre-created tasks that can be placed in the experiment tree and act as bot checks to help ease your mind about the quality of data collected.